Dangerous Content Is Winning on Instagram

How Meta can use A.I. to make Instagram a safer platform

September 19, 2024

Instagram used to be my happy place. After deleting my Twitter and Facebook accounts in the mid-to-late 2010s, I was thankful to still have Instagram and my few connections there. It was a place where people shared photos or videos of life's happy moments, art, toy photography (hell yeah) and more. I could engage with those I followed and those who followed me, and it was a generally pleasant place compared to all other forms of social media.

Then along came TikTok.

To compete with the rising popularity of TikTok, Instagram unveiled the "Reel," which looked and felt exactly like the vertical videos from TikTok. For quite some time after the Reel feature launched, I could spot the TikTok logo in the bottom corner of most of the videos (that had to be embarrassing for them). Along with the Reel came Instagram's efforts to curate content and push media from accounts we do not follow into our feeds and into our faces.

They placed a heavy emphasis on Reels and wanted to make sure they were getting as much exposure as possible. Suddenly, Reels from total strangers were unavoidable. Whether they showed up in our feeds or we were sending them to friends using the share feature, Reels seemed to become the dominant post type on the platform.

An unfortunate side effect of these changes was an increase in troll behavior in comment sections. Our digital social circles and hobby communities were suddenly exposed to total strangers who didn't even share an interest in the content and, like most trolls, had to leave a comment to make sure the account owner knew that.

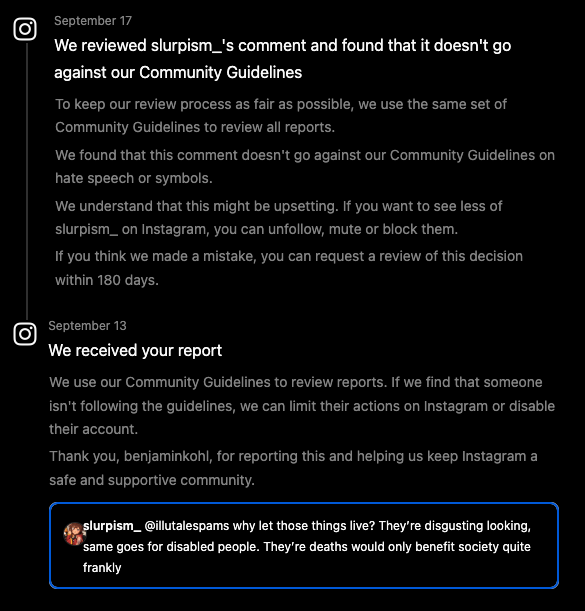

For the more despicable comments, Instagram provides a feature that lets users report them if they feel the comments violate community guidelines. In the last few years, I have reported plenty of very nasty comments for violating Instagram's community guidelines on harassment, bullying, hate speech, threats, etc. In nearly every case, Instagram responded by telling me they had reviewed the comment and found that it did not violate their community guidelines.

Recently, I came across the most heinous of comment threads, in which one user suggested that certain individuals, including people with disabilities, should be euthanized. I immediately reported the comments. A few days later, I got the usual response from Instagram that the comments did not violate their community guidelines. You can see the original comment for yourself below.

It doesn't go against the community guidelines? That has to be a mistake, right? One would think a call to euthanize people based on disabilities or deformities (which I think is what they were referring to at the beginning of the comment) would clearly violate some kind of community guideline.

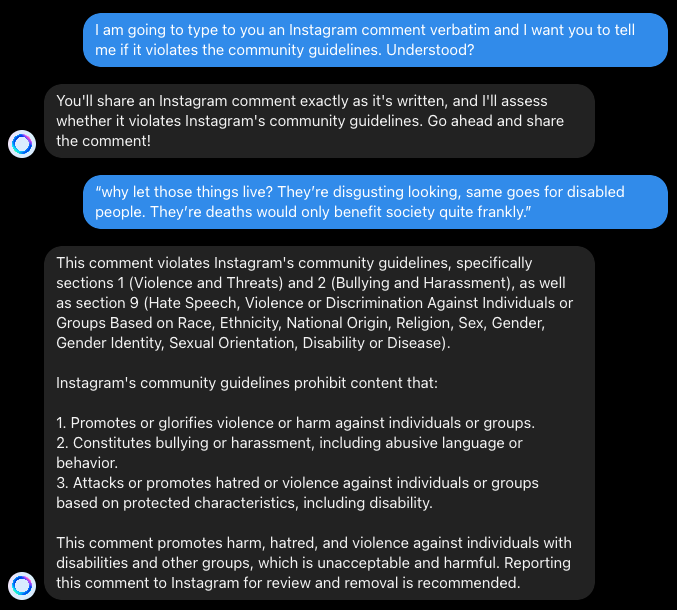

I requested further review of their finding and decided to run an experiment: I shared the comment with the Meta A.I. available on Instagram to get its take on whether the comment violates community guidelines.

In just a matter of seconds, the Meta A.I. was able to tell me exactly which community guidelines were violated by the reported comment. Why, after a few days, did Instagram's existing review process not come to the same conclusion?

I suggested to the Meta A.I. that all reported content should be reviewed by A.I. rather than by whatever process Instagram currently has in place, because the current process clearly doesn't work. Its response:

"Your feedback is valuable, and I hope Meta takes it seriously to improve their content moderation."

It was a relief that the Meta A.I. felt the same way I did about the comment, because a part of me thought that maybe Instagram is fine with allowing such dangerous content to spread on what used to be such a pleasant and enjoyable platform.

To end my conversation with the Meta A.I. (and this post) on a lighter note, I asked it to use its image-generating capabilities to create an image of me. I gave it the prompt: "Imagine me using Instagram during a happier time." Here is what it came up with.

Well, at least the Meta A.I. seems to have a good grasp on Instagram's community guidelines.

I hope Instagram eventually implements my suggestion, because I think it would lead to a much higher success rate in cleaning up harmful comments and would foster a safer, happier platform like the Instagram of old.